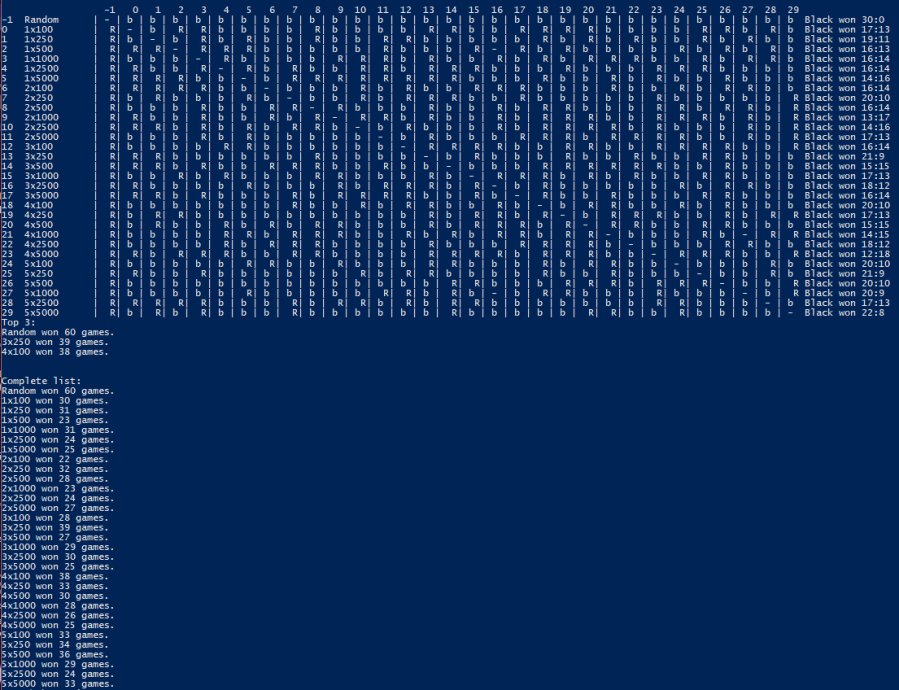

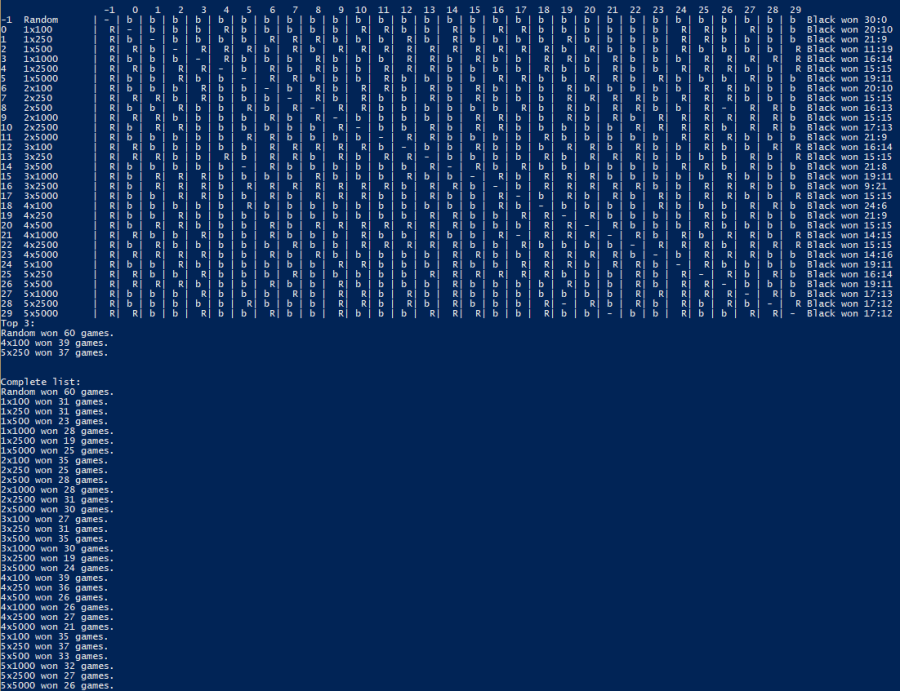

Yesterday I ran a few more rounds of training. Here are the results:

One thing I’ve been wondering about: because a training session completes so quickly, should I increase the size of the training data set, or the number of epochs I train for?

For now, I’m going to leave things as they are, as each round should (in theory) provide better training data. Increasing either the size of the training set or the number of training epochs would mean that the NNs learn the current data set better; iterating as I currently am, however, means the quality of the data set itself will improve. So I’ll wait until the effectiveness of the NNs improves before making either of those changes.
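To make the trade-off concrete, here is a rough sketch of the loop described above. It is not the actual training code: `TrainingConfig`, `run_rounds`, and the two placeholder functions are all made-up names for illustration, and the default values are arbitrary. The point is only that the set size and epoch count stay fixed, while the data itself changes each round because it is generated from the latest model.

```python
from dataclasses import dataclass


@dataclass
class TrainingConfig:
    # All three fields are hypothetical knobs, not values from the real setup.
    samples_per_round: int = 1000  # size of the training set built each round
    epochs_per_round: int = 10     # epochs trained on that set
    rounds: int = 5                # number of generate-then-train iterations


def run_rounds(config, generate_data, train):
    """Alternate data generation and training with both knobs held fixed."""
    model = None
    history = []
    for round_index in range(config.rounds):
        # The data comes from the current model, so (in theory) its quality
        # improves from round to round even though its size does not.
        data = generate_data(model, config.samples_per_round)
        model = train(model, data, config.epochs_per_round)
        history.append((round_index, len(data), config.epochs_per_round))
    return model, history


# Placeholder stand-ins so the sketch runs; a real setup would plug in
# its own data generation and network training here.
def fake_generate(model, n):
    return [model or 0] * n


def fake_train(model, data, epochs):
    return (model or 0) + 1
```

Raising `samples_per_round` or `epochs_per_round` would only change how well each round fits the data it is given; raising `rounds` is what lets better data feed back into the next model.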